Defense


There’s a lot of talk about how AI is going to destroy the world and needs to be regulated, as if some kind of Skynet had been created. Aside from this being largely detached from reality, I thought I would look into the history of ‘AI’ being used in cyber defence.

I used Grok to create this table:

| Year | Technology/AI Type | Description/Use in Defensive Cybersecurity | Key Developers/Examples |
|---|---|---|---|
| 1986–1987 | Rule-Based Systems & Early Anomaly Detection | Early foundational work on rule-based expert systems for detecting unauthorized access and intrusions in computer networks. These systems used predefined rules to flag deviations from normal behavior, laying the groundwork for modern intrusion detection systems (IDS). | Dorothy E. Denning (pioneering research on statistical models for anomaly detection in audit records, with key publication in February 1987). |
| 1987 | Unsupervised Machine Learning | Development of anomaly-based IDS using unsupervised learning to identify abnormal patterns in network traffic without labeled data, enabling binary decisions (normal vs. abnormal) for threat alerting. This addressed emerging cyber threats in networked environments. | D. E. Denning’s “An Intrusion-Detection Model” (IEEE paper, published February 1987); early IDS prototypes. |
| Late 1980s | Machine Learning in Threat Hunting | Initial implementation of ML algorithms to automate threat detection and intrusion identification, though limited by data availability. Focused on anomaly detection to reduce manual monitoring. | Researchers in academia and government; precursors to operational ML-based security tools. |
| 1998–1999 | Supervised & Unsupervised ML Benchmarks | Creation of benchmark datasets for evaluating ML methods in cybersecurity, including supervised learning for threat classification and unsupervised for anomaly detection. This spurred research but yielded few immediate operational products due to high false positives. | DARPA (Defense Advanced Research Projects Agency); initiatives for ML in security problems like intrusion detection, including the 1998-1999 Intrusion Detection Evaluation Program. |
| 2000 | Supervised Machine Learning | Deployment of ML for spam, phishing, and malicious URL filtering in emails, using labeled datasets to classify threats and block harmful content. This became a staple in email security. | Developers at major providers (e.g., precursors to Gmail’s ML filters); supervised algorithms for pattern matching. |
| Early 2000s | Supervised ML with Big Data | Expansion of datasets enabled more sophisticated ML models for real-time threat detection, including behavioral analysis to identify malware beyond signatures. | Research community; integration into anti-virus and filtering systems. |
| 2010s | Behavioral Analytics & Next-Gen Anti-Virus | Use of ML for user and entity behavior analytics (UEBA) to detect insider threats and anomalies; next-generation anti-virus shifted from signatures to behavioral ML for malware detection. | Splunk (UEBA tools), Darktrace (AI-driven anomaly detection, founded 2013), CrowdStrike and Palo Alto Networks (ML for threat detection). |
| 2012 | Supervised ML in Anti-Virus | Introduction of next-gen anti-virus systems using supervised ML on non-signature data like traffic behavior to detect and block malware. | Cylance (pioneering ML-based endpoint protection, founded 2012). |
| Mid-2010s | Deep Learning & Neural Networks | Adoption of deep neural networks (e.g., RNNs, LSTMs) for complex pattern recognition in network traffic, enabling advanced intrusion detection and botnet identification. | Research from Springer and others; applied in IDS for zero-day threats. |
| 2017 | Transformer Models | Introduction of transformers for parallel processing in NLP tasks, applied defensively for log analysis, threat intelligence parsing, and anomaly detection in cybersecurity. | Google (Vaswani et al., “Attention Is All You Need”); foundational for later LLMs in defensive tools. |
| 2018–2020 | Encoder-Only LLMs & RNN Variants | Use of models like BERT and ELECTRA for threat intelligence, malware classification, and intrusion detection; LSTMs/GRUs for sequence-based anomaly detection in logs and traffic. | Google (BERT in 2018, ELECTRA in 2020); applications in IDS (e.g., RNN-ID, LSTM-based systems). |
| 2021–2022 | Early LLMs in Malware & Vuln Detection | LLMs like CANINE/XLNet for malware deobfuscation and classification; datasets like BigVul/CVEfixes for training ML on vulnerabilities; hybrid ML for forensics and threat intel. | Various researchers; CommitBART for commit analysis, PatchRNN for secret patches. |
| 2023 | Generative AI & Advanced LLMs | Integration of generative AI for synthetic data generation, predictive modeling, and automated incident response; LLMs like GPT-4, LLaMA, Falcon for vulnerability detection, phishing simulation, and security training. | OpenAI (GPT-4, March 2023), Meta (LLaMA, February 2023), TII (Falcon, May 2023); tools like Charlotte AI (CrowdStrike, released May 2023) for SOC automation. |
| 2023–2024 | Multimodal LLMs & Hybrid Approaches | Advancements in models like GPT-4o, Mixtral-8x7B, DeepSeek-V2 for real-time threat detection, code vulnerability repair (with varying success rates in hardware bugs), and fuzzing (e.g., ChatAFL discovering 9 vulnerabilities). | OpenAI (GPT-4o, May 2024), Mistral AI (Mixtral-8x7B, December 2023), DeepSeek (DeepSeek-V2, May 2024); CyBER-Tuned (released 2023–2024) for domain-specific threats, Pulse (published August 2024) for zero-day ransomware; LATTE (published October 2023) for binary analysis discovering 37 bugs. |
| 2024–2025 | Agentic LLMs & Specialized Models | Emerging use of LLMs for smart contract security, binary analysis, and hardware security; focus on fine-tuning with RAG/DPO for adaptive defenses against evolving threats. Includes agentic enhancements for SOC automation. | IBM (Granite Code, May 2024), Microsoft (Phi-3, April 2024), Google (CodeGemma, April 2024); PLLM-CS (published May 2024, with 100% accuracy in intrusion detection on UNSW_NB 15 dataset); CrowdStrike (Fall 2025 release of Charlotte AI AgentWorks for agentic SOC). |

It’s funny, Grok didn’t mention itself.

Are we doomed?

Some people argue that AI is going to give attackers a major advantage over defenders. So what’s new? Ignore AI completely and consider this:

- Attackers have a one (or few) to many advantage.

- Attackers largely don’t have to care who they breach; they just attack millions of targets and hope they get in somewhere.

- Attackers typically break up the kill chain and have an ecosystem, e.g. Initial Access, Initial Access Brokers, Ransomware Deployment, Negotiations, Cleaning Money, etc.
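A quick back-of-the-envelope sketch of the "attack millions and hope" point. It assumes every attempt is independent and has the same small chance of success, which is a simplification, and the per-target rate below is a made-up number for illustration, not a measurement. The function name `p_at_least_one_breach` is hypothetical.

```python
def p_at_least_one_breach(p_single: float, n_targets: int) -> float:
    """Chance that at least one of n_targets independent attempts succeeds."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_targets < 0:
        raise ValueError("n_targets must be non-negative")
    # P(at least one success) = 1 - P(every attempt fails)
    return 1.0 - (1.0 - p_single) ** n_targets


if __name__ == "__main__":
    # Hypothetical per-target success rate, purely for illustration.
    p_single = 0.0001
    for n in (1, 1_000, 1_000_000):
        print(f"{n:>9} targets -> {p_at_least_one_breach(p_single, n):.4f}")
```

The point of the sketch is that a tiny per-target chance still adds up when the attacker doesn't care which target falls. That was true long before anyone bolted AI onto it.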

Defenders (and most orgs don’t really have a defensive team per se; this is typically left to the IT team, without the resources and support to do so) have to contend with:

- Agile and Nimble Attackers

- Organisations that are slow to change, act or make decisions

- Organisations that don’t provide adequate funding, resources, training or support

The idea that AI is changing the status quo seems odd to me. I’ve got friends building AI-assisted attack platforms. They are super cool, but they are still going to run into the same challenges attackers have today:

- It’s not that easy to break into an org. You don’t just say “I want to hack X org” and have it magically become possible.

People seem to have a very dramatic, Hollywood-like view of this.

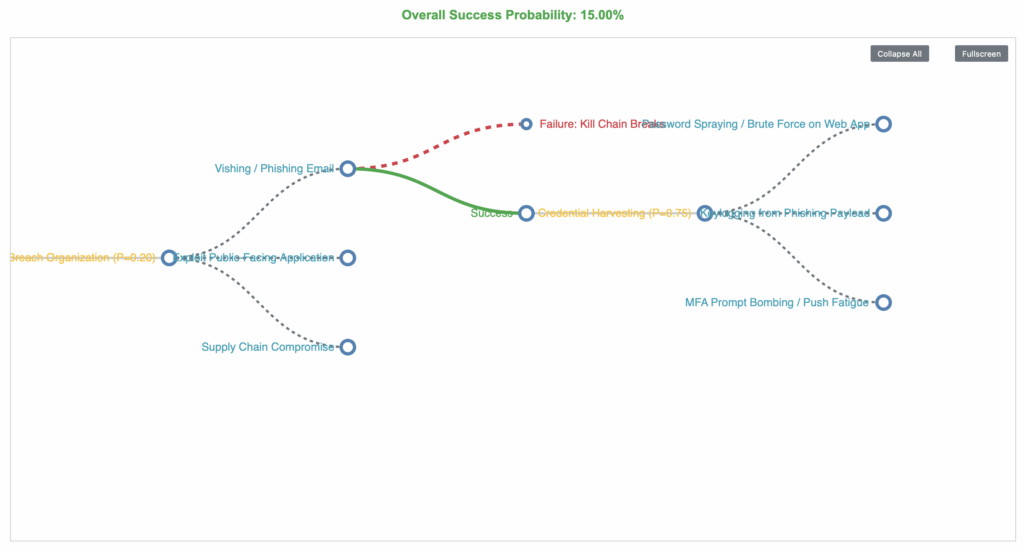

I’m trying to build some probability chains to demonstrate this (work in progress).

Hacking / attacking is not magic: you can’t just say “hack” and suddenly have root on a domain controller. Legal offensive cyber operations take time, planning, etc.

Hacking into networks illegally also takes time, and a range of variables has to line up for a kill chain to succeed.
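Here is a minimal sketch of what a probability chain for a kill chain could look like. It assumes the stages are independent and that every one of them has to succeed, so the end-to-end chance is just the product of the per-stage chances. The stage names and the 0.5 values are hypothetical placeholders, not real-world figures, and `chain_success` is an illustrative name.

```python
from math import prod


def chain_success(stage_probs: dict[str, float]) -> float:
    """Multiply per-stage probabilities; every stage must succeed."""
    for stage, p in stage_probs.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{stage}: probability must be between 0 and 1")
    return prod(stage_probs.values())


# Hypothetical stages and values, not measurements.
EXAMPLE_CHAIN = {
    "initial access": 0.5,
    "privilege escalation": 0.5,
    "lateral movement": 0.5,
    "root on domain controller": 0.5,
}

if __name__ == "__main__":
    print(f"end-to-end: {chain_success(EXAMPLE_CHAIN):.4f}")
```

Even with a generous coin flip at every stage, four stages leave roughly a 6% chance end to end, and each extra stage or control a defender adds pushes that number down further.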

I think people would do better with more science and less hype... but hey, what do I know! I’m running back to do more AI-enabled defensive work! (That means offensive as well, but it sounds nicer the other way round.)