Leadership

Do they replace the need for OSINT and Supplier engagement?

I’ve been conducting sales and assurance-based activities for some while (I’m not counting, it will make me feel old!) and I have started looking at a range of supplier management tools which leverage tool-based OSINT, attack surface mapping and manual data inputs. I have to say this:

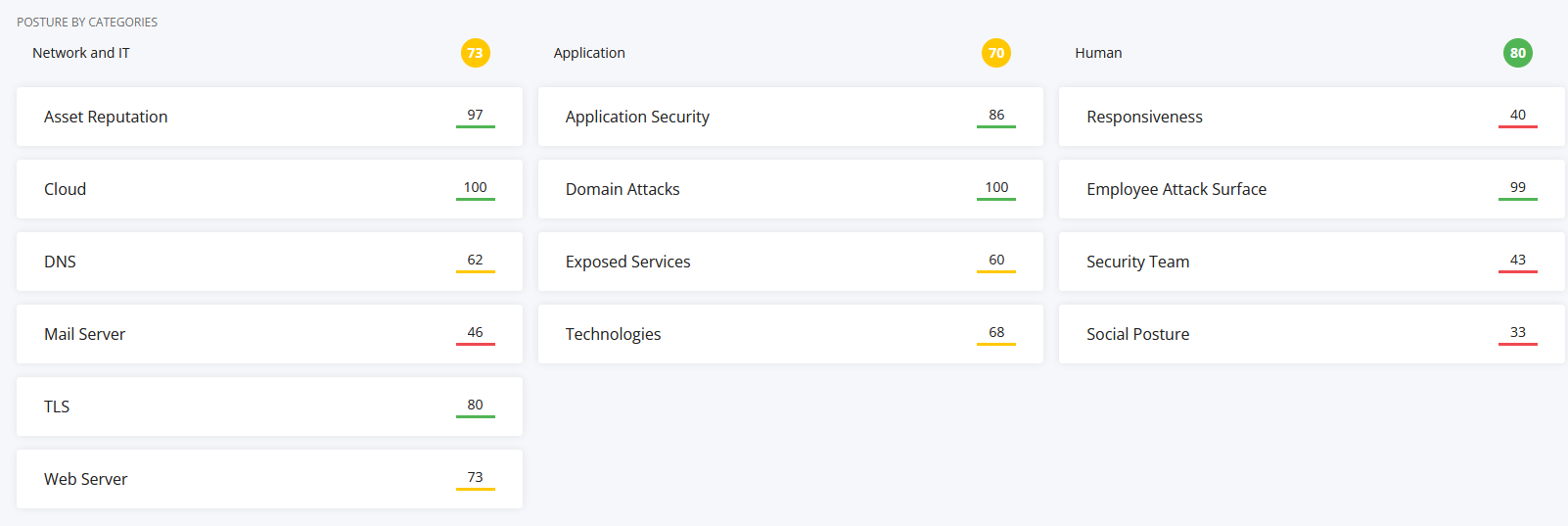

Automated attack surface mapping tools tend to lack both range of capability and depth. As such, it’s my current opinion that:

- They lack business context

- They lack asset context

- They can’t find all the assets

- They don’t show all the vulnerabilities

- The scores generated are based on what appears to be limited rules

- Their ability to conduct subdomain enumeration is lacking

- They rely on data collection methods which have known limitations

Let’s look at an example:

- Asset discovery via automated passive means has a high false positive rate

- Counting “dark web” mentions is an odd metric considering the mentions aren’t contextualised

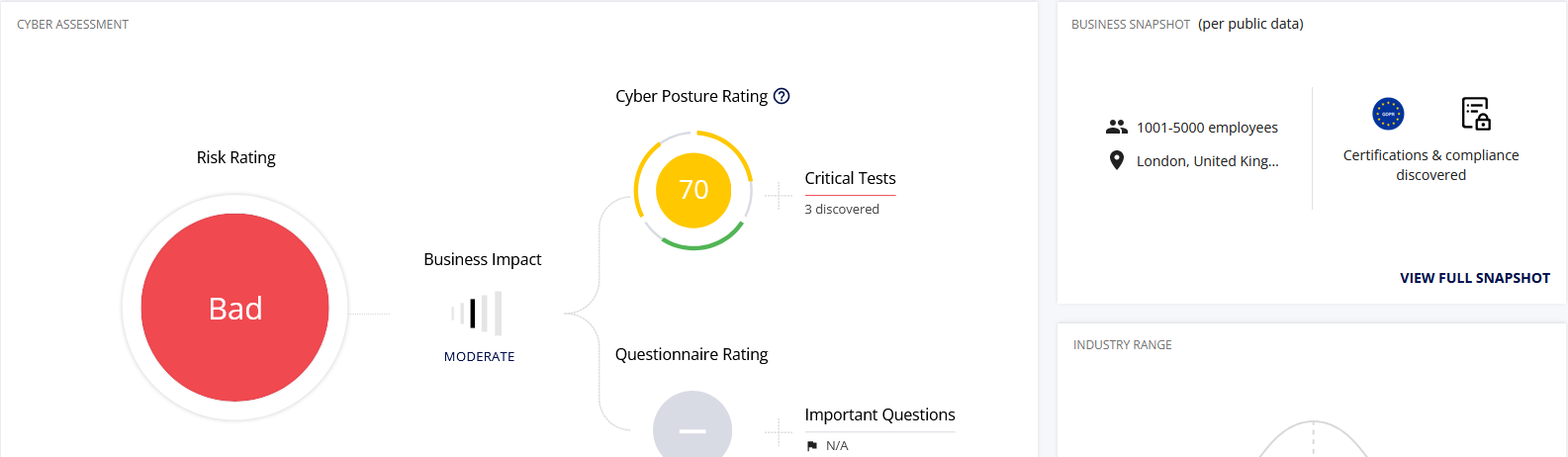

- Categorising metadata is great, except here we have a formula and algorithm interpreting OSINT data and passive asset data to “metricify” a position that is significantly more complex. The public face in this instance (in my opinion, and I have intimate knowledge) just isn’t reflective of reality, in both directions. In some areas this tool is painting too positive a picture.

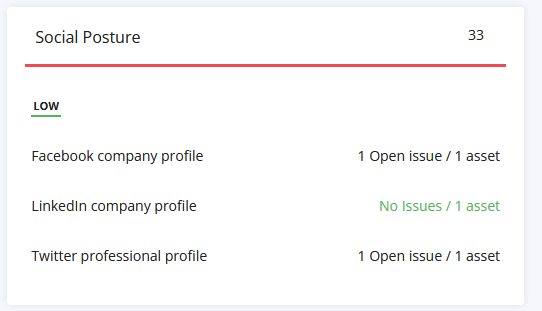

Let’s take Social Posture… a score of 33 (what does this even mean?)

- How does this tool know about all the brands and channels?

- What about all the elements it has missed?
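To illustrate why a single number is hard to interpret, here is a minimal Python sketch. I don't know how this tool actually calculates its scores; the category names, the weights and the weighted-average formula below are all assumptions made up for the example.

```python
# Hypothetical category weights (percentages); not taken from any real tool.
WEIGHTS = {"patching": 40, "email_auth": 20, "exposed_services": 40}


def overall(scores):
    """Collapse per-category scores (0-100) into one weighted score."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) // 100


# Two invented suppliers with very different profiles.
supplier_a = {"patching": 60, "email_auth": 60, "exposed_services": 60}
supplier_b = {"patching": 100, "email_auth": 100, "exposed_services": 0}

print(overall(supplier_a), overall(supplier_b))
```

Both invented suppliers come out with the same overall score, even though one is mediocre everywhere and the other has a serious problem in one area. The headline number alone can't tell you which of those you are looking at.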

This tool is certainly scraping common data sources such as:

- Shodan/Censys/BinaryEdge for asset data

- DNS

- Passive DNS (e.g., DomainTools/SecurityTrails etc.)

- Honeypot Data (GreyNoise as an example)

- URL/Domain Reputation Data (e.g., VirusTotal/OTX/AbuseIPDB)

All of which are great data sources; however, in my experience you need a human to interpret the data, remove false positives and contextualise it. Sure, assurance platforms allow human input, but they also paint a picture very quickly and with abstraction levels (it appears the more you pay the more you see, shocker that!).
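As a rough illustration of keeping a human in the loop, the Python sketch below merges passive findings from several sources and queues anything seen by only one source for review instead of scoring it. The `Finding` fields, the source labels and the `min_sources` threshold are assumptions for the example, and no real API is called.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    asset: str   # hostname or IP as reported by the source
    source: str  # hypothetical label, e.g. "passive_dns"


def triage(findings, min_sources=2):
    """Group findings by normalised asset name and split by corroboration."""
    sources_by_asset = defaultdict(set)
    for f in findings:
        # Normalise case and any trailing dot so duplicates collapse.
        name = f.asset.strip().lower().rstrip(".")
        sources_by_asset[name].add(f.source)

    corroborated, needs_review = {}, {}
    for asset, sources in sorted(sources_by_asset.items()):
        bucket = corroborated if len(sources) >= min_sources else needs_review
        bucket[asset] = sorted(sources)
    return corroborated, needs_review


# Invented sample data.
findings = [
    Finding("www.example.com", "passive_dns"),
    Finding("WWW.example.com.", "internet_scan"),
    Finding("old-vpn.example.com", "passive_dns"),
]
corroborated, needs_review = triage(findings)
print("corroborated:", corroborated)
print("needs human review:", needs_review)
```

Even a corroborated asset still needs someone to confirm it actually belongs to the supplier; two sources agreeing is not the same as business context.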

Some technical details

Firstly, when we look at a business we have to look at its corporate and legal structure. In the UK this involves Companies House data, and global orgs are obviously more complex to analyse.

- When it comes to internet attack surface we have a range of locations, but a starting point is domain identification. From here we would then look at subdomains, TLS metadata, IP space etc.

- We would look at DNS data, e.g. SPF/DMARC and other records

- We would look at historic data

- We would fingerprint web applications and services

- We would look for WAFs or WAF bypasses

- We would look at the supplier’s supply chain, and we would consider OSINT data as well as metadata from internet footprints.

- We would also want to look at news articles.

- We would look at breach metadata and threat intelligence
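As one small example from the list above, here is a hedged Python sketch of reviewing SPF and DMARC TXT records once they have been collected. It takes the record strings as input (no DNS lookup is performed), the function names are my own, and the two checks are only a starting point for an analyst, not a complete assessment.

```python
def parse_tags(record):
    """Split a 'k=v; k=v' style TXT record (as used by DMARC) into a dict."""
    tags = {}
    for part in record.split(";"):
        key, sep, value = part.partition("=")
        if sep:
            tags[key.strip().lower()] = value.strip()
    return tags


def email_auth_notes(spf, dmarc):
    """Return plain-language notes for an analyst; deliberately not a score."""
    notes = []
    spf_terms = spf.lower().split()
    if not spf_terms or spf_terms[0] != "v=spf1":
        notes.append("no SPF record found")
    elif spf_terms[-1] in ("all", "+all"):
        notes.append("SPF ends in +all, so any sender passes")

    tags = parse_tags(dmarc)
    if tags.get("v", "").upper() != "DMARC1":
        notes.append("no DMARC record found")
    elif tags.get("p", "").lower() == "none":
        notes.append("DMARC policy is p=none (monitoring only)")
    return notes


# Invented sample records for a placeholder domain.
spf = "v=spf1 include:_spf.example.net -all"
dmarc = "v=DMARC1; p=none; rua=mailto:dmarc@example.com"
print(email_auth_notes(spf, dmarc))
```

The output is a list of observations rather than a number, which leaves the judgement (is `p=none` a gap, or a rollout in progress?) with the person reviewing the supplier.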

Now you might think this is just like using a dashboard… it’s not: the information is specifically reviewed by a human. Even the discovery techniques will be tuned to meet the specific scenario and target organisation; threads will be pulled, metadata analysed, news articles and company intelligence artifacts read. This is not work for a robot!

The good, the bad and the ugly

The good thing is these tools give lots of support for the supplier management and assurance processes. They have a large foundational toolset, they leverage third party data, and they let you tag, assign and remediate. They also have supplier questionnaires and engagement toolsets.

However, they also have limitations, both from the nature of the activity and from the subscription plans.

I love that these tools highlight that, without “computer hacking skills”, you can paint a picture of the attack surface and therefore highlight risk. I do worry, however, that poorly configured or misunderstood datasets will give the wrong impression. The idea of making everything a “security score” is, in my opinion, a very 90s approach to security. If we look at CESG and the NCSC’s move to principle-based security, you have to ask… can security and supplier assurance really be boiled down to a score?